After my eventful night of dealing with Ubiquiti’s short-sighted decision to remove docker/podman from the Dream Machine, I’ve had to move my network topology away from the UDM and fleet of UDM devices. I have completed the migration of services, and I wanted to go over the topology as it is now.

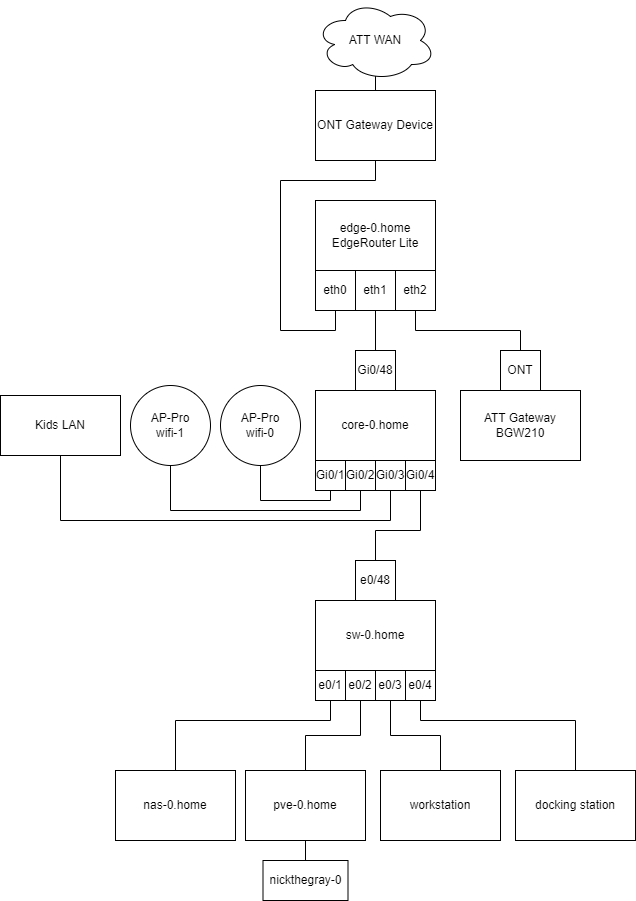

Let’s start with a quick diagram of the network as it is today:

The above diagram shows a logical overview of the network paths within my home network. I will break down each component in the topology and give a general overview of its purpose, starting with the edge-most device and working my way down the stack.

The device labeled “ONT Gateway Device” is a device provided by AT&T to convert the fiber media to Cat5e. It is powered by a battery-backed DC power supply in my shop room in the basement. Since I do not have much management control over this device but do have physical access to it, I typically consider it the “edge-most” device in my topology.

Downstream from this device is my EdgeRouter Lite. I purchased this device years ago, and it was my edge routing device for quite some time before I was swept up in the shiny features the UDM offered. In my honest opinion, the EdgeRouter was one of Ubiquiti’s best product lines. The Vyatta-like configuration, feature-rich hardware, and small form factor made it a hard device to replace over the years.

In order to authenticate with AT&T’s network services, the EAP gateway is employed to forward 802.1X auth requests over to eth2, which is connected to the commercial BGW210 device AT&T provided many years ago. The UDM was able to replace this component, as a wpa_supplicant container could be run on the UDM to authenticate directly. This was a driving factor in migrating away from the EdgeRouter Lite and, unfortunately, a short-lived one.

Downstream from the EdgeRouter is a Cisco Catalyst 4948 configured with SVIs to route between VLANs within the network. It is also the distribution point for clients within the network. Wi-Fi and the rooms in the house are connected to this switch and configured accordingly.
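To make the role of the SVIs a bit more concrete, here is a minimal Python sketch of the decision a layer 3 switch makes for each packet: stay inside the VLAN, route between VLANs, or hand the packet upstream. The VLAN IDs, subnets, and function names are all made up for illustration; they are not my actual addressing plan or anything the switch itself runs.

```python
import ipaddress

# Hypothetical VLAN IDs and SVI addresses, chosen only for illustration.
SVIS = {
    10: ipaddress.ip_interface("192.168.10.1/24"),  # e.g. wired clients
    20: ipaddress.ip_interface("192.168.20.1/24"),  # e.g. Wi-Fi
    30: ipaddress.ip_interface("192.168.30.1/24"),  # e.g. servers
}


def vlan_for(host):
    """Return the VLAN ID whose SVI subnet contains host, or None."""
    addr = ipaddress.ip_address(host)
    for vlan_id, svi in SVIS.items():
        if addr in svi.network:
            return vlan_id
    return None


def forwarding_decision(src, dst):
    """Describe, roughly, how traffic from src to dst would be handled."""
    src_vlan = vlan_for(src)
    dst_vlan = vlan_for(dst)
    if src_vlan is None:
        return "unknown source"
    if dst_vlan is None:
        # No local SVI owns the destination, so it follows the default route.
        return "sent upstream via the default route"
    if src_vlan == dst_vlan:
        return f"switched at layer 2 within VLAN {src_vlan}"
    # Different VLANs: the host sends to its gateway (the source SVI),
    # and the switch routes the packet out onto the destination VLAN.
    return (
        f"routed from VLAN {src_vlan} (gateway {SVIS[src_vlan].ip}) "
        f"to VLAN {dst_vlan}"
    )


if __name__ == "__main__":
    print(forwarding_decision("192.168.10.5", "192.168.10.9"))
    print(forwarding_decision("192.168.10.5", "192.168.30.9"))
    print(forwarding_decision("192.168.10.5", "8.8.8.8"))
```

The real switch does this in hardware with a routing table and longest-prefix matching, so treat the dictionary lookup here as a simplification that only works because the example subnets do not overlap.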

Further downstream from core-0, I have deployed a Nexus 3k for 10G connectivity between my NAS and the hypervisor running Proxmox. This Nexus 3k replaced a Ubiquiti 10G aggregation switch. I had to learn a good amount of (old) information to configure it. One thing I appreciated was the similarity of commands between Catalyst and Nexus. I suppose the 3k came early enough in Cisco’s lifetime, before they lost their way. Rest in peace Cisco, you were really cool once.

Diving deeper into the network, I have my Proxmox host, which acts as a bit of a switch on its own. Utility VMs are deployed here (you’re reading from one right now).

In the coming weeks, I would like to explain more about what I did to deploy these services, as well as some of the weird headaches I had to work through to maintain the uptime my family has grown accustomed to. DevOps as a lifestyle doesn’t have work-life balance. It is life.